#### 分析疫苗接种表,一针、二针、三针的数量

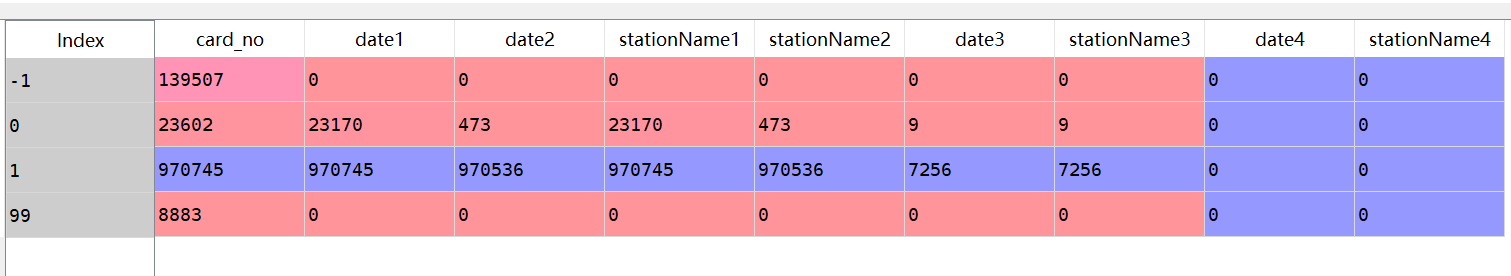

1)可以先groupby,然后count,然后赋给一个df,看这个df,一目了然(如下附图)

#### m =df1.groupby(‘flag’).count();

####

2)或者df1(‘flag’).value_counts() 函数,出简单结果

#### 常用的处理null值得函数

df.dropna(subset=[‘xian’],inplace =True)

df= df.fillna(0)

**日期的处理函数**

today=datetime.date.today()

yesterday = today – datetime.timedelta(days=1)

ori_day=today.strftime(‘%Y%m%d’)

更新随机列

df1[‘other’] = np.random.randint(0,200, size=len(df1))

df1[‘cnt’] = df1[‘cnt’]-df1[‘other’]

#### 常用的将str转化为int值得方法

df_ym_result[‘vcc_num’] = df_ym_result[‘vcc_num’].astype(int)

#### 抽取社保人员的正则匹配内容

name = re.findall(r'<s xm=”([^”]+)”‘,htmltest)

抽取常住人口返回的标记

patern = re.compile(‘CDATA\[(.+?)\]’)

yimiao_count[0] = yimiao_count[0]+1

res_text = patern.findall(htmltest)

people_info_dict ={‘card_no’:card_no}

if(len(res_text)>=23):

people_info_dict ={‘card_no’:card_no}

people_info_dict[‘XM’]=res_text[1] #姓名

people_info_dict[‘XBDM’]=res_text[2] #性别代码

people_info_dict[‘XBMC’]=res_text[3] #性别名称

#### 获取输入传参

file_index = str(sys.argv[1])

file_start_num = int(sys.argv[2])

#### 删除card_no重复的行

df2 = df1.drop_duplicates(subset=[‘card_no’],keep=’first’)# 删除重复

#### windows下绝对路径

orifile =’D:\\pybasic\\basic\\public_health_people_quchong.csv’

获取在A不在B中的数据集

“`python

#获取在df1中,而不在df2中的card_no,先转化为set,然后直接相减

df1_cardno_list = df1[‘card_no’].drop_duplicates().values.tolist()

df2_cardno_list = df2[‘card_no’].drop_duplicates().values.tolist()

df1_cardno_set = set(df1_cardno_list)

df2_cardno_set = set(df2_cardno_list)

cardno_alone_set = df1_cardno_set – df2_cardno_set #在df1 不在 df2中

cardno_alone_list = list(cardno_alone_set)

c={“card_no” : cardno_alone_list}

#将列表c转换成字典

c_df=pd.DataFrame(c)#将字典转换成为数据框

“`

#### 获取id等于mm的行索引,两种方法

df3[df3[‘card’]==mm].index.tolist()[0]

df3[df3[‘card’]==mm].index.values[0]

**************************************************************************************************

#### 常用去重函数,修改列名称函数

#df2.columns= [‘card’,’xm’,’phone’,’nian’]

df3.rename(columns={‘card’:’card_no’},inplace = True)

df1 = df1.drop_duplicates(subset=[‘card_no’],keep=’first’)# 删除重复

#### 条件筛选

#df2.loc[((df2[‘flag’]==1) & (df2[‘date3’]!=0)), ‘kind’] = ‘3p’

#df2.loc[((df2[‘nian’] < ‘1982’) & (df2[‘nian’] >= ‘1962’)), ‘AgeGroup’] = ’40-60岁’

#### 时间日期处理

“`python

df1[‘date’] = pd.to_datetime(df1[“testTime”], format=’%Y-%m-%d’)

df3 = df1[df1[‘isTest’]==1]

mt =datetime.strptime(dateedge+’ 12:00:00′,’%Y/%m/%d %H:%M:%S’)

df3 = df3[df3[‘date’]>(mt)]

print (“只看”+dateedge+”后得 行数为:”+str(df3.shape[0]))

“`

#### str截取和拼凑

df2[‘newcode’] = df2[‘card_no’].str.cat(df2[‘testTime’])

d_df[‘time’] = d_df[‘testTime’].str[11:19]

#### readcsv读取时指定类型

df3 = pd.read_csv(mainfile,encoding=’utf8′,dtype ={‘phone’:’str’})

#### Hash MD5转化

“`python

import hashlib

def transf(str0):

md5 = hashlib.md5(str0.encode(“utf-8”))

# md5 = hashlib.md5(str0)

return(md5.hexdigest())

ori = open(‘ori55.txt’,’r’,encoding=’utf-8′)

out = open(‘output56.txt’,’w’,encoding=’utf-8′)

for i in ori:

i = i.strip()

print (i)

out.write(i+’,’+transf(i)+’\n’)

ori.close()

out.close()

“`

#### 分割大文件

“`python

filename =’ph_card_xm_torun.csv’

reader = pd.read_csv(filename, iterator=True, chunksize=12000,encoding=’utf8′) # 每50000条数据为一个chunk

# 需要存的file

head,tail = os.path.splitext(filename)

print (“read ok”)

i = 1 #定文件名

for chunk in reader:

#chunk.rename(columns={‘card’:’card’,’xian’:’xian’},inplace=True)

chunk1 =chunk[[‘D_SFZH’,’D_XM’,’D_LXDH’]]

chunk1.columns=[‘card’,’xm’,’phone’]

chunk1.to_csv(‘{0}_{1}{2}’.format(head, i, tail),columns=[“card”,”xm”,”phone”],index=False,encoding=’utf8′)

print(‘保存第{0}个数据’.format(i))

i = i+1

“`

正则匹配

“`python

data_list =(json.loads(htmltest))[‘data’][‘list’]

for i in range(len(data_list)): #对于每一页,抓取其中每篇文章的ID

time.sleep(0.5)#延时一下,防止被封

url_list.append(data_list[i][‘url’])

time_list.append(data_list[i][‘publish_time’])

tmp =data_list[i][‘item_id’] #拿到每一篇文章的ID

b_url = ‘https://boot-source.xuexi.cn/data/app/’+tmp+’.js?callback=callback&_st=1616990163257′ #利用ID,再去爬单篇文章的页面

headers={‘user-agent’: ‘Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.141 Safari/537.36’}

r2 = requests.get(url= b_url, headers=headers)

r2.encoding = ‘utf-8’

htmltest2 = (r2.text)[9:-1] #清洗数据,不用管

text_single = (json.loads(htmltest2))

title = text_single[‘title’]

content = (list(re.findall(r”>(.*?)<“,text_single[‘content’]))[0])#把文本中没必要的<p>干掉

title_list.append(title) #将所有的title,存到一个list里

content_list.append(content) #将所有的content,存到一个list里

if __name__ == '__main__':

main()